DeepSpeed Compression: A composable library for extreme compression and zero-cost quantization

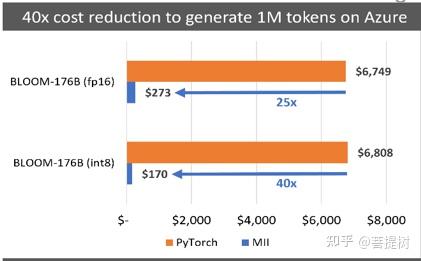

Large-scale models are revolutionizing deep learning and AI research, driving major improvements in language understanding, creative text generation, multilingual translation, and much more. But despite their remarkable capabilities, the models' large size creates latency and cost constraints that hinder the deployment of applications built on top of them. In particular, increased inference time and memory consumption […]
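To make the memory-consumption point concrete, here is a rough back-of-the-envelope sketch (not taken from DeepSpeed) of how much memory a model's weights alone occupy at different numeric precisions. The 1.3-billion-parameter size is a hypothetical example, and the estimate deliberately ignores activations, the KV cache, and runtime overhead.

```python
# Rough weight-memory estimate for a model stored at different precisions.
# Assumption: only the weights are counted; activations, KV cache, and
# runtime overhead are ignored. The 1.3B parameter count is hypothetical.

BITS_PER_WEIGHT = {"fp32": 32, "fp16": 16, "int8": 8, "int4": 4}


def weight_memory_gb(num_params: int, bits: int) -> float:
    """Return the memory needed to store num_params weights, in GB (1e9 bytes)."""
    return num_params * bits / 8 / 1e9


if __name__ == "__main__":
    num_params = 1_300_000_000  # hypothetical model size
    for name, bits in BITS_PER_WEIGHT.items():
        print(f"{name}: {weight_memory_gb(num_params, bits):.2f} GB")
```

Halving the bits per weight halves the weight footprint, which is one reason quantizing to INT8 or lower is attractive when deploying large models.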

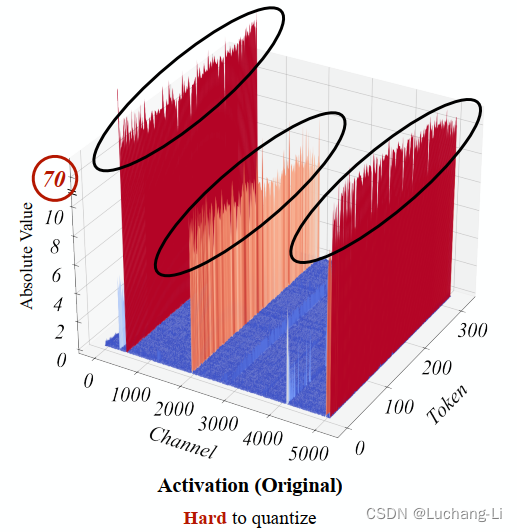

A summary of ZeroQuant and SmoothQuant quantization - CSDN Blog

[PDF] DeepSpeed-Inference: Enabling Efficient Inference of Transformer Models at Unprecedented Scale

Practicing Trustworthy Machine Learning: Consistent, Transparent, and Fair AI Pipelines [1 ed.] 1098120272, 9781098120276

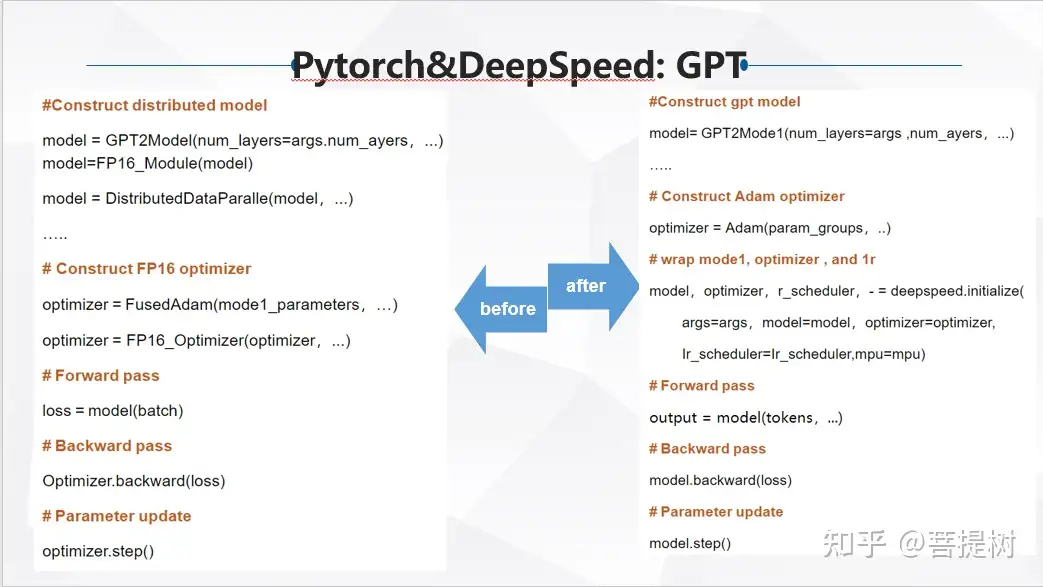

How should we evaluate deepspeed, Microsoft's open-source distributed training framework? - Zhihu

Microsoft's Open Sourced a New Library for Extreme Compression of Deep Learning Models, by Jesus Rodriguez

Shaden Smith on LinkedIn: DeepSpeed Data Efficiency: A composable library that makes better use of…

ChatGPT is just the appetizer; something bigger is coming in 2023! - Motianlun (墨天轮)

Shaden Smith on LinkedIn: dfasdf

Interpreting Models – Machine Learning

How should we evaluate deepspeed, Microsoft's open-source distributed training framework? - Answer by 菩提树 - Zhihu

(PDF) DeepSpeed Data Efficiency: Improving Deep Learning Model Quality and Training Efficiency via Efficient Data Sampling and Routing

DeepSpeed Compression: A composable library for extreme compression and zero-cost quantization - Microsoft Research