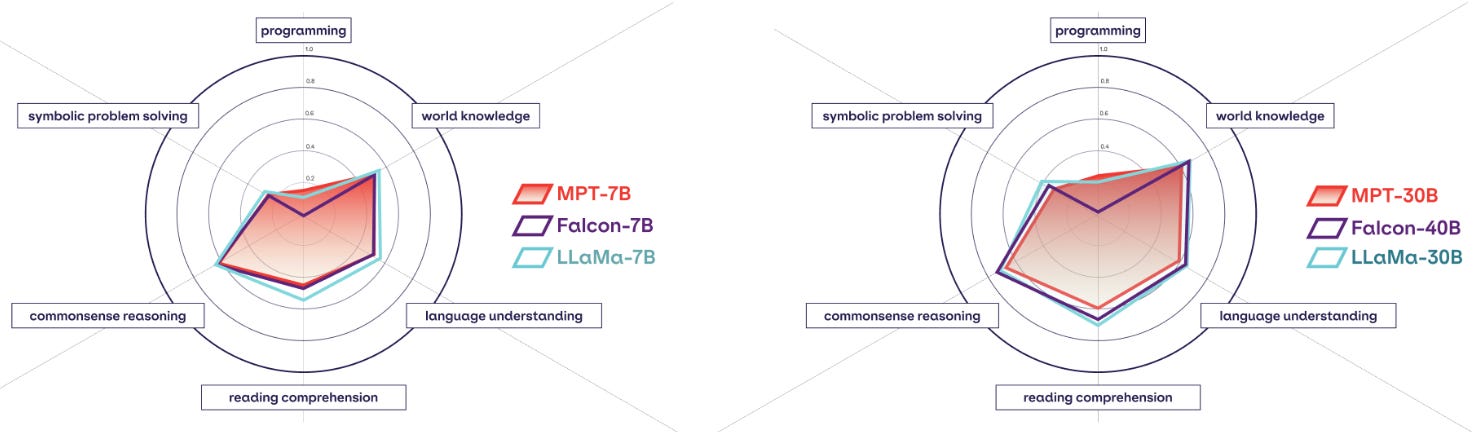

MPT-30B: Raising the bar for open-source foundation models

Introducing MPT-30B, a new, more powerful member of our Foundation Series of open-source models, trained with an 8k context length on NVIDIA H100 Tensor Core GPUs.

Applied Sciences October-2 2023 - Browse Articles

Stardog: Customer Spotlight

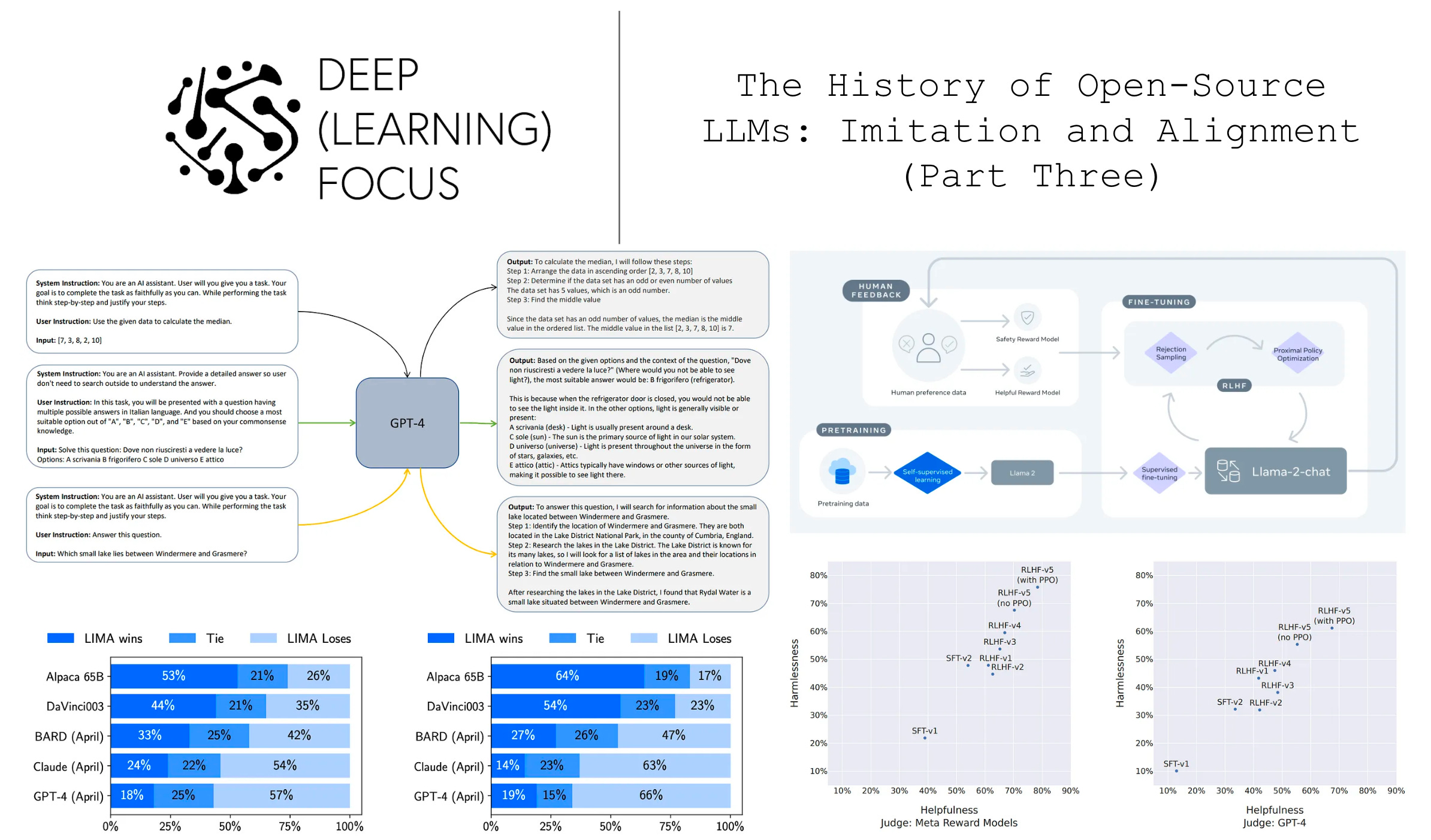

The History of Open-Source LLMs: Imitation and Alignment (Part Three)

February 2002 - National Conference of Bar Examiners

12 Open Source LLMs to Watch

MosaicML, now part of Databricks! on X: MPT-30B is a bigger sibling of MPT-7B, which we released a few weeks ago. The model arch is the same, the data mix is a

MPT-30B-Instruct (MosaicML Pretrained Transformer - 30B Instruct): details, name, overview, usage, open-source status, and commercial licensing information

Ashish Patel 🇮🇳 on LinkedIn: #llms #machinelearning #data #analytics #datascience #deeplearning…

The History of Open-Source LLMs: Better Base Models (Part Two)

maddes8cht/mosaicml-mpt-30b-instruct-gguf · Hugging Face

MPT-30B: Raising the bar for open-source foundation models