DistributedDataParallel non-floating point dtype parameter with requires_grad=False · Issue #32018 · pytorch/pytorch · GitHub

$ 18.99 · 4.5 (260) · In stock

🐛 Bug Using DistributedDataParallel on a model that has at-least one non-floating point dtype parameter with requires_grad=False with a WORLD_SIZE <= nGPUs/2 on the machine results in an error "Only Tensors of floating point dtype can re

Data Parallel / Distributed Data Parallel not working on Ampere

parameters() is empty in forward when using DataParallel · Issue

Achieving FP32 Accuracy for INT8 Inference Using Quantization

Cannot update part of the parameters in DistributedDataParallel

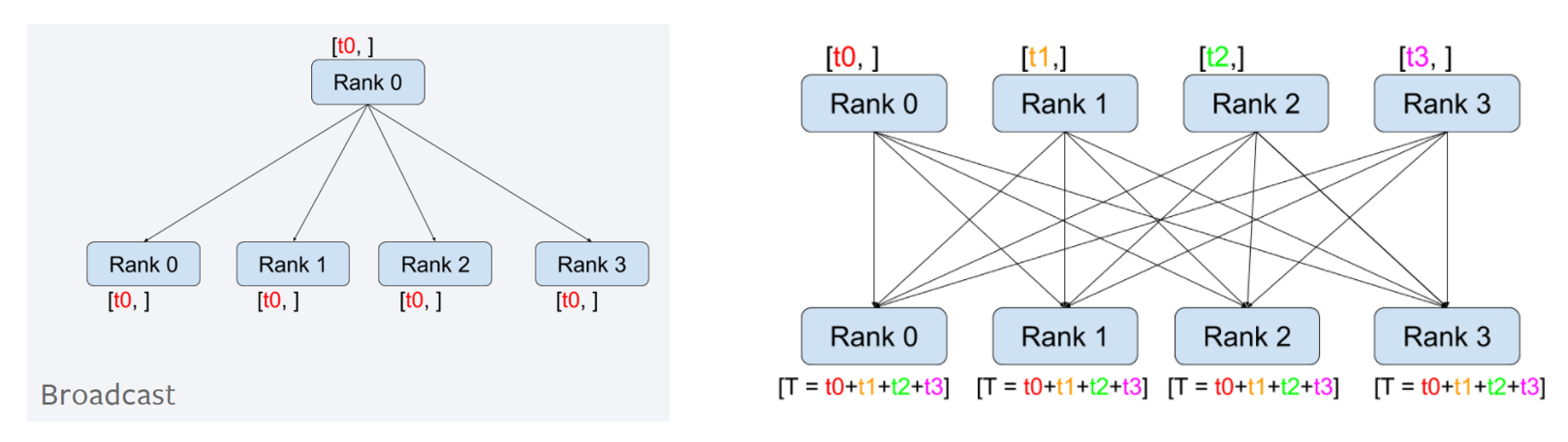

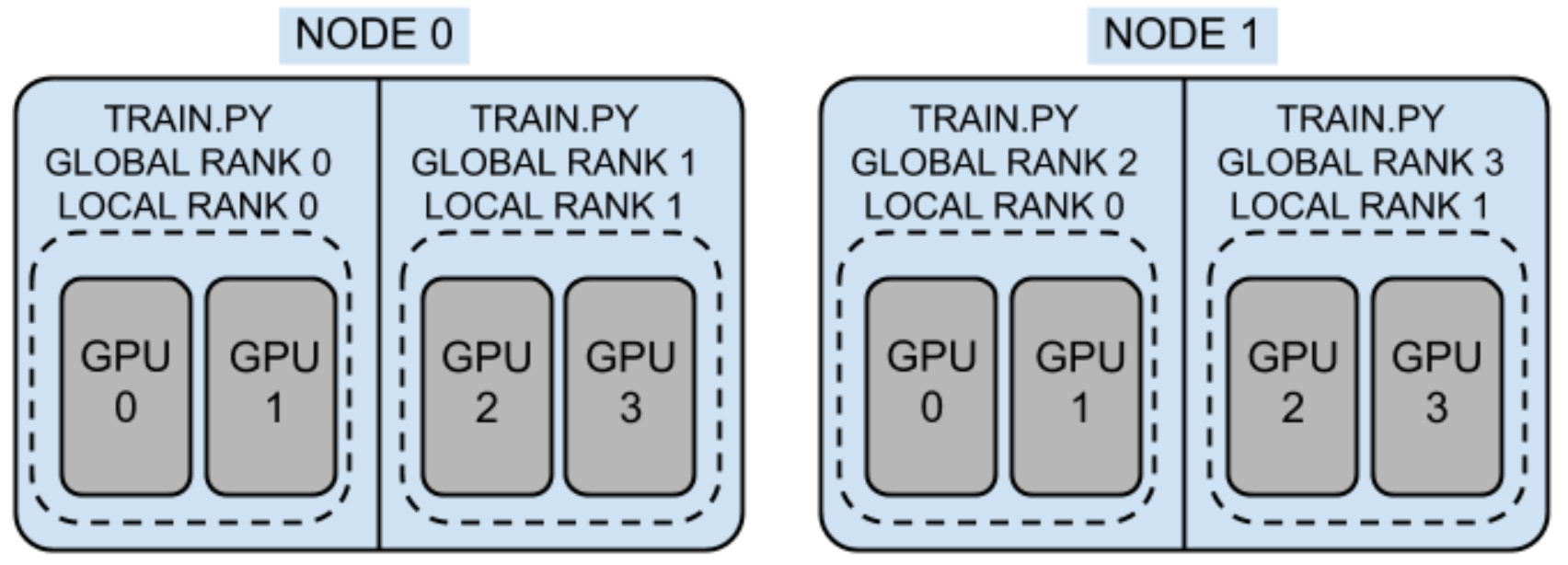

Pytorch - DistributedDataParallel (2) - 동작 원리

Torch 2.1 compile + FSDP (mixed precision) + LlamaForCausalLM

Torch 2.1 compile + FSDP (mixed precision) + LlamaForCausalLM

Pytorch - DistributedDataParallel (1) - 개요

Torch 2.1 compile + FSDP (mixed precision) + LlamaForCausalLM

modules/data_parallel.py at master · RobertCsordas/modules · GitHub

AzureML-BERT/pretrain/PyTorch/distributed_apex.py at master