How to Measure FLOP/s for Neural Networks Empirically? – Epoch

$ 15.99 · 5 (349) · In stock

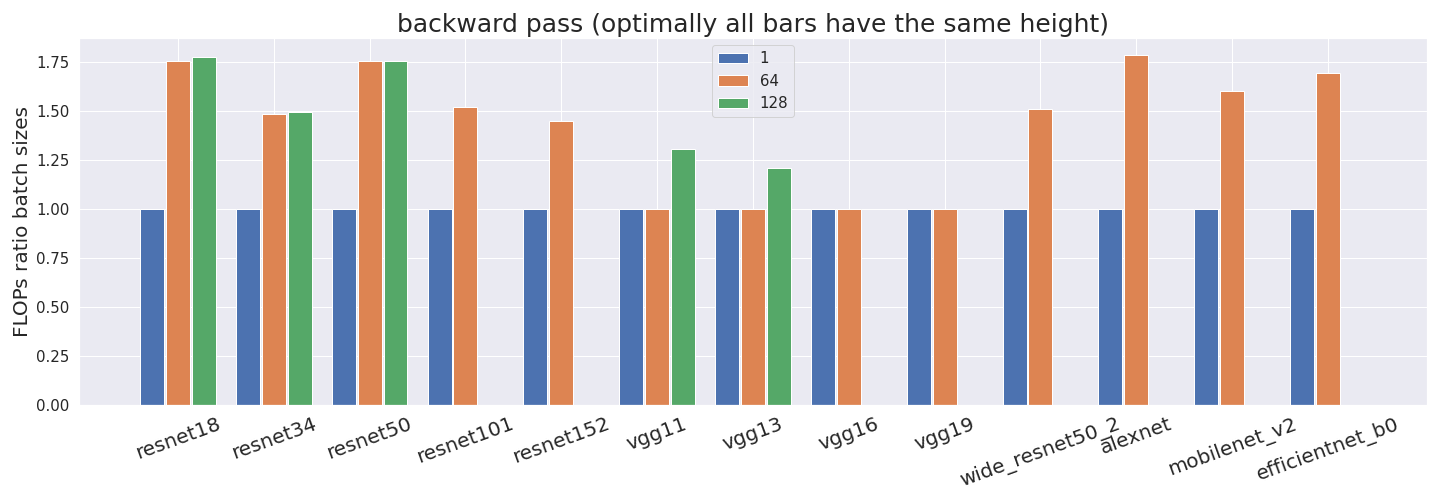

Computing the utilization rate for multiple Neural Network architectures.

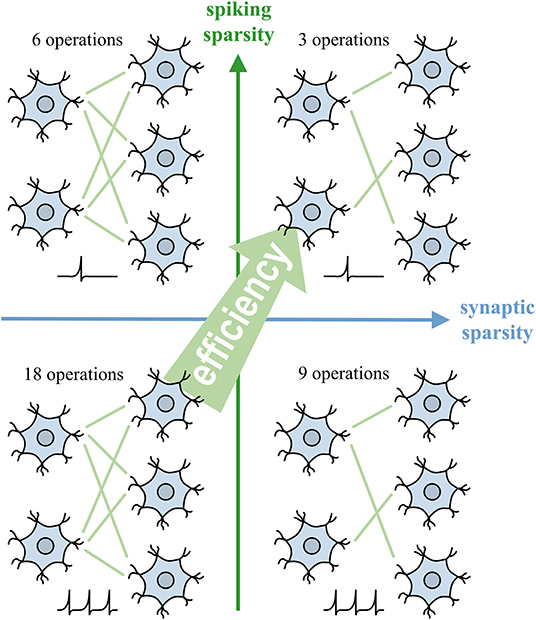

Backpropagation With Sparsity Regularization for Spiking Neural Network Learning – Frontiers

How to measure FLOP/s for Neural Networks empirically? – LessWrong

Comparison of normalizing importances by FLOPs versus by memory.

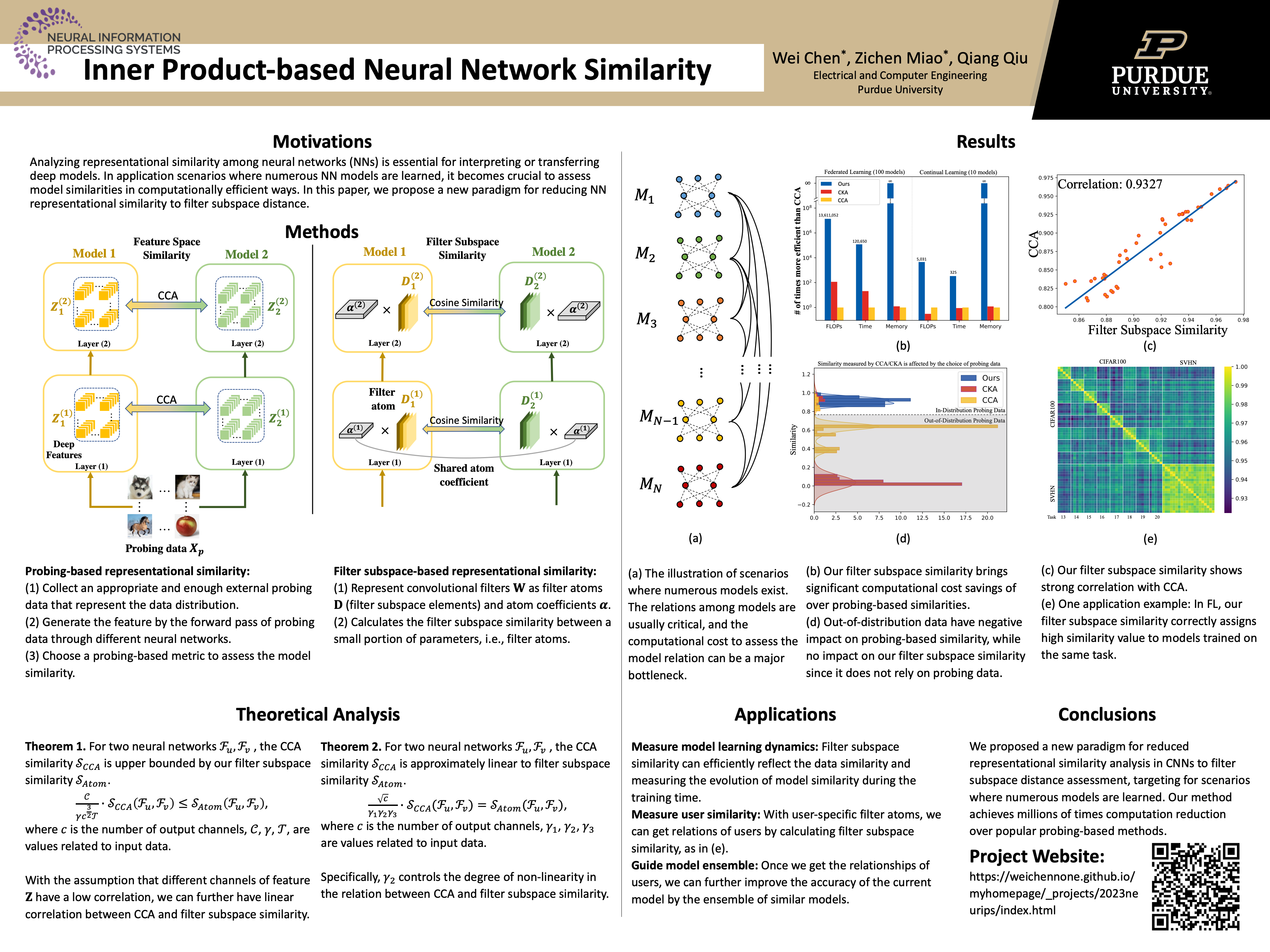

NeurIPS 2023

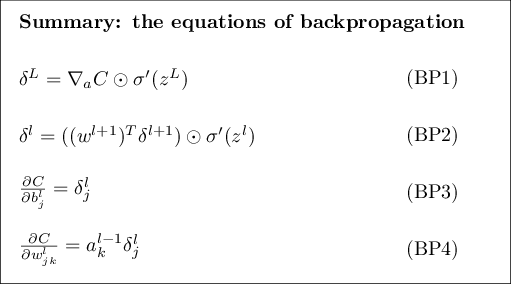

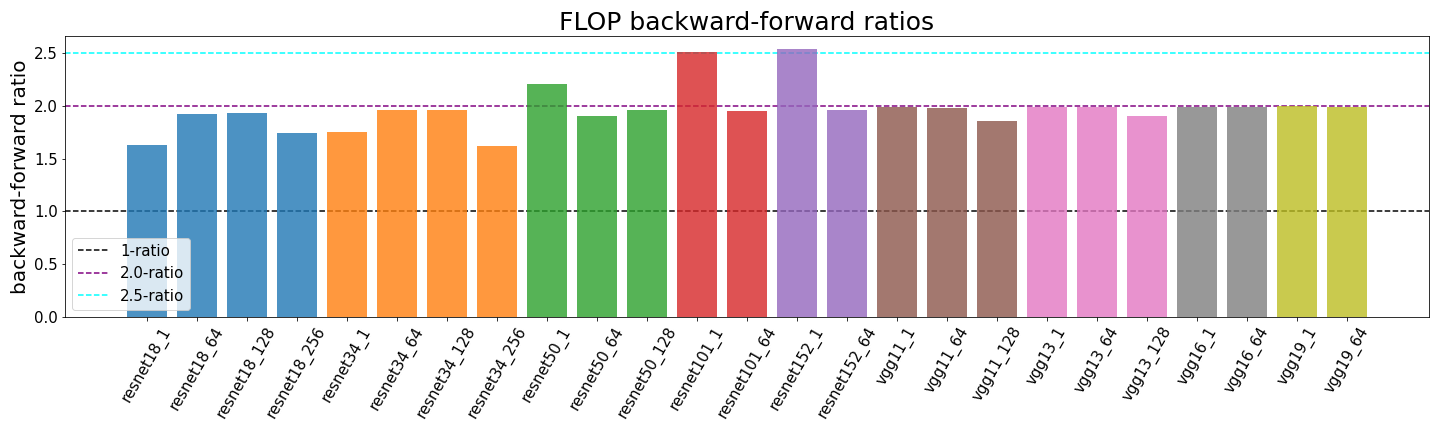

What's the Backward-Forward FLOP Ratio for Neural Networks? – Epoch

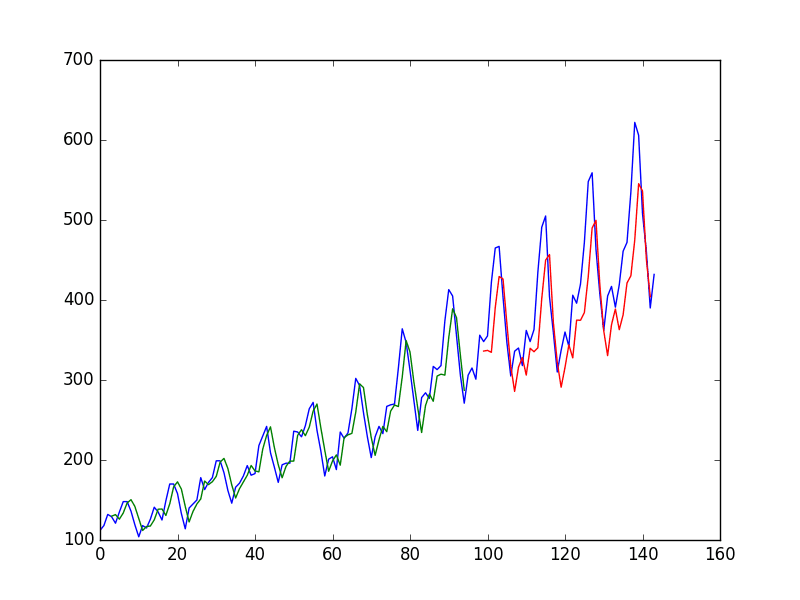

Time Series Prediction with LSTM Recurrent Neural Networks in Python with Keras

Sensors, Free Full-Text

Mathematics, Free Full-Text

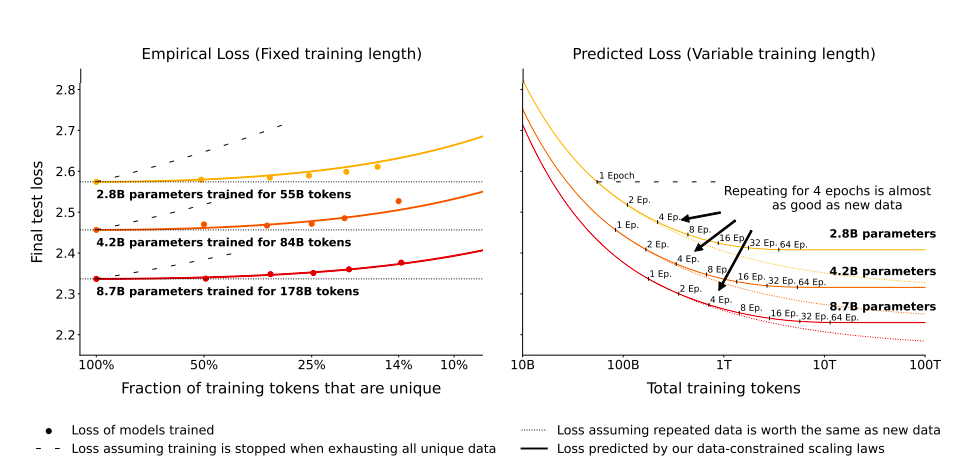

Papers Explained 85: Scaling Data-Constrained Language Models, by Ritvik Rastogi

CoAxNN: Optimizing on-device deep learning with conditional approximate neural networks - ScienceDirect

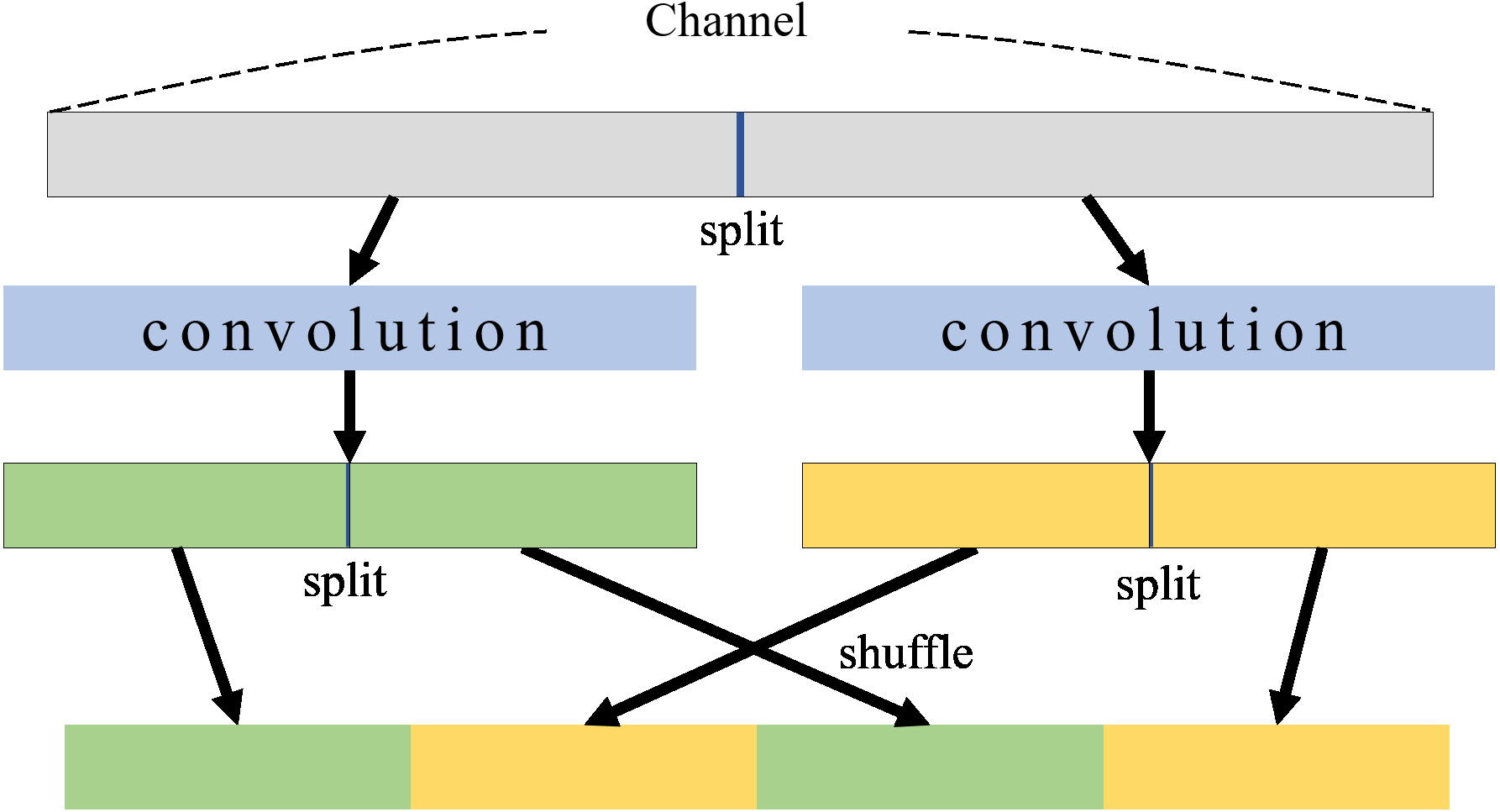

PresB-Net: parametric binarized neural network with learnable activations and shuffled grouped convolution [PeerJ]